As generative AI gains traction in media and business, UX and UI designers around the world are starting to wonder if and how AI will change the way we practice design. Will AI become part of the creative process, as a co-creator or as a replacement for human designers? Personally, I believe we are approaching a new way of doing design, especially when it comes to the boundaries between design and development, which can become truly blurred with AI-assisted design and coding tools such as Claude Code, Cursor, and Figma Make.

Read also: Best UX/UI Design Software and Tools for 2025/2026

Last updated: Nov 2025

What I’ve tried

In the past two years, I’ve made an effort to try out as many generative AI tools as possible, in the context of what would be usable in an AI-assisted design workflow. The tools I’ve tried include:

- ChatGPT (LLM)

- Claude (LLM)

- Claude Code (AI code)

- Cursor (AI code)

- Figma Make (AI design)

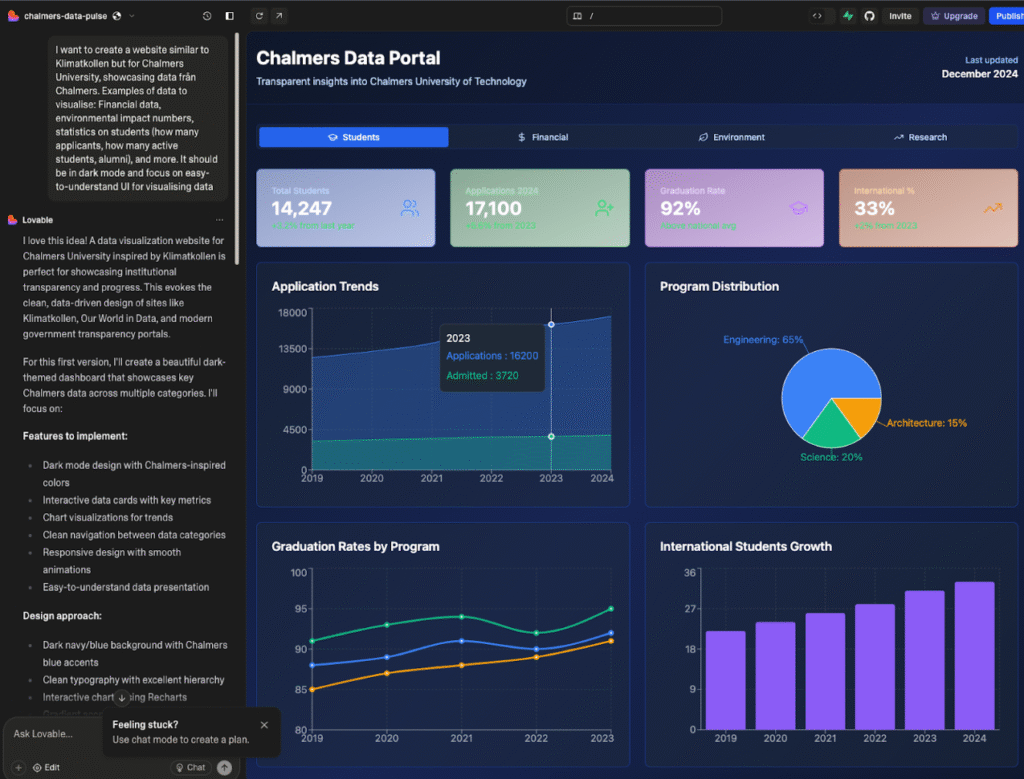

- Lovable (AI web design)

- Replit (AI app builder)

- Canva (AI graphic design)

- Adobe Photoshop and Adobe Illustrator (AI photo and illustration editing)

- Midjourney (AI images)

- Leonardo AI (AI images)

- Blender AI Render Plugins (AI 3D modelling)

- Pika Labs (AI video generator)

- Runway (AI video generator)

- ElevenLabs (AI voices)

- Sana (AI work agent assistant)

- n8n (AI workflow automation)

- Microsoft Copilot (AI companion)

- and more

You can read about my explorations with image and sound generation software here: (2024) How useful is Generative AI for Creative Work?

What genuinely surprises me is how fast these technologies are developing. Just two years ago, the user interface designs I generated with tools such as ChatGPT and Midjourney were laughable. They were not up to the standards of a real production environment. Then, during the summer of 2025, Figma Make was released. While it is still no replacement for human designers, it feels light years ahead of my past experiences with generating UI with general-purpose tools such as ChatGPT.

This development indicates that we have not seen the end of generative AI tools for UI design. If anything, I believe we are only at the beginning. If such huge improvements in technology can happen in two years, what wouldn’t be possible in 5, 10, or 20 years?

Currently, I do not feel generative AI can replace designers in any part of the design process, but I believe it is too early to write it off. I don’t think we’ve seen the best possible generative AI capabilities yet.

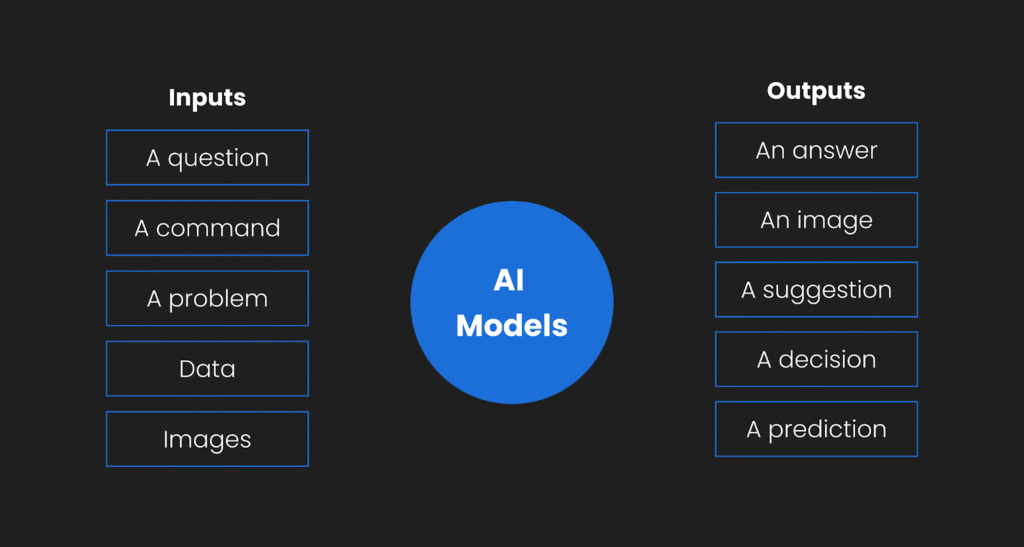

How Generative AI can be used in the design process

I believe that the use of generative AI in design practice is still in its infancy. We are at a stage where designers are still experimenting with how generative AI can be used in different parts of the design process. In this section, I’ll present a few examples of how AI could be used in various design phases, from research to ideation, content creation, and code development, as well as how it can act as a personal assistant.

Read also: How Generative AI is Revolutionizing Design Workflows

AI in the research phase

- Use AI to find similar products, apps, and websites, and get an analysis of them.

- Use AI to find relevant articles, books, and content to learn more about.

Tools to try: ChatGPT, Claude

AI in the ideation phase

- Use AI as a thinking partner – Compare and evaluate different ideas, solutions, and concepts.

- Ask it how you can move forward in your project, or for ideas about things you haven’t considered yet.

Tools to try: ChatGPT, Claude

AI in the content generation phase

- Generative AI can be used to produce texts, images, videos, sound, and more. However, you still need to have an idea of the concept or context.

Tools to try: ChatGPT & Claude (text), Pika Labs (video), HeyGen (video), Runway (video), Midjourney (images), Suno (music), ElevenLabs (voice/sound)

AI in the design-to-code phase

- Use AI to transform your design, sketch, or text description into functional code.

Tools to try: Figma Make, Lovable, Claude Code, Replit, Cursor

AI as a personal assistant

- AI could be used as a personal assistant or agent, autonomously performing routine and repetitive tasks.

- For example, recording interviews, transcribing, and giving summaries.

Tools to try: Sana, n8n
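If you like keeping notes in code, the phase-by-phase suggestions above can be sketched as a small lookup table. This is only an illustration: the phase keys and the `tools_for` helper are names I made up for this sketch, and the tool lists are simply copied from the sections above.

```python
# Phase-to-tool suggestions copied from the lists above.
# The dictionary keys and the helper name are illustrative assumptions.
TOOLS_BY_PHASE: dict[str, list[str]] = {
    "research": ["ChatGPT", "Claude"],
    "ideation": ["ChatGPT", "Claude"],
    "content generation": [
        "ChatGPT", "Claude", "Pika Labs", "HeyGen",
        "Runway", "Midjourney", "Suno", "ElevenLabs",
    ],
    "design-to-code": ["Figma Make", "Lovable", "Claude Code", "Replit", "Cursor"],
    "personal assistant": ["Sana", "n8n"],
}


def tools_for(phase: str) -> list[str]:
    """Return the suggested tools for a phase, or an empty list if unknown."""
    return TOOLS_BY_PHASE.get(phase.strip().lower(), [])


if __name__ == "__main__":
    # Print the whole overview, one phase per line.
    for phase, tools in TOOLS_BY_PHASE.items():
        print(f"{phase}: {', '.join(tools)}")
```

Calling `tools_for("ideation")` gives you the tools listed for that phase, and an unknown phase returns an empty list instead of raising an error.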

My experimental AI-assisted design workflow

Note: This is not a finalized workflow, and I have not yet used this process in any paid design-based projects, only in explorative AI projects. This is an experimental AI-assisted design workflow. I aim to learn and understand how, when, and where generative AI might be useful in a UX/UI design process.

Currently, my co-creative process with AI begins with manual planning and brainstorming of a design concept. After the initial planning, I use large language models such as ChatGPT or Claude to flesh out my ideas into proper documents and plans. At this stage, I may ask an LLM to identify missing parts of my plan, to ask me questions, and to correct any spelling and grammar mistakes in my texts.
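To make this step repeatable, the three requests I make to the LLM can be wrapped in a small prompt template. The sketch below is one possible way to do it, not a recommended standard: the function name `build_plan_review_prompt`, the exact wording of the tasks, and the example plan are all made up for illustration. It only builds the text, so you can paste the result into ChatGPT or Claude yourself.

```python
def build_plan_review_prompt(plan: str) -> str:
    """Wrap a design plan in a structured review request for an LLM."""
    # The three tasks mirror what I ask for at this stage of the workflow.
    tasks = [
        "Identify parts of the plan that are missing or underspecified.",
        "Ask me clarifying questions before suggesting changes.",
        "Correct spelling and grammar without changing the meaning.",
    ]
    numbered = "\n".join(f"{i}. {task}" for i, task in enumerate(tasks, start=1))
    return (
        "You are helping me review a design plan.\n\n"
        f"Tasks:\n{numbered}\n\n"
        f"Plan:\n{plan.strip()}\n"
    )


if __name__ == "__main__":
    # Hypothetical example plan, used only to show the output format.
    print(build_plan_review_prompt("A habit-tracking app for students."))
```

Keeping the tasks in a list makes it easy to add or remove requests as the workflow changes.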

Once I have an idea and a plan for what I want to create, I prompt Figma Make in natural language to generate a prototype for me. I have needed to improve the design iteratively by prompting Figma Make further. Once I feel satisfied or bored, or feel like I’ve hit a wall, I copy and paste the generated design into a Figma file, where I continue with manual iterations until I’m satisfied. To be honest, I don’t think any of the AI-generated designs have been anywhere near a finished product, but it has been cool to be able to generate functional “fun” prototypes.

If I want to create a functional coded prototype of my design, I use Claude Code to generate the first version of the code. Then, I move on to Cursor, where I iterate on the design in smaller increments based on my own judgment and taste, using the built-in AI agent. When I get bored or feel limited, I manually edit the code in VS Code, switching between AI-assisted and manual coding sessions.

In summary, I am experimenting with generative AI in various parts of my design process. Right now, I don’t feel it’s good enough to replace manual design work. But I do find it very helpful for text processing and brainstorming in the early stages, before even opening Figma. I also enjoy “vibe coding” and think it’s such a cool thing to play around with. I have noticed, though, that whenever I want to create a design that I actually want to publish somewhere as my own, I don’t use AI. I still use Figma to create things, sometimes from scratch and sometimes using my old designs as starting templates. I’m not sure if this means that AI isn’t up to par, or if I’m just so used to designing things manually that I can’t stop.

Research on Generative AI in Design Practice

AI in UI/UX Design is used for early-stage tasks, offering speed and breadth but requiring human oversight, policies, and better evaluation.

To find out more about how other people use generative AI in design practice, I recently conducted a scoping literature review of research published between 2021 and 2025, exploring the questions “What is known from existing literature about how AI can be used in interaction design practice to support design practitioners? And what are prominent research gaps for future research?”

I searched Google Scholar and used the LLM-based chat in the Dia Browser to summarize and synthesize the papers in a table. Note that LLM-generated summaries and syntheses can contain hallucinations. However, I manually scanned 240 papers and included 62 of them, so even if the LLM hallucinates now and then when summarizing the contents, I think the general overview can still be useful.

This is what I found:

- How genAI is used in practice: Predominantly for research synthesis, ideation, briefs, personas, storyboard/journey mapping, and low–mid fidelity prototyping; limited impact on convergent, high-fidelity, and evaluation phases.

- Possibilities: Faster iterations, broadened exploration and creativity, scalable personalization, and mixed‑initiative co‑creation across multimodal workflows.

- Risks and challenges: Hallucinations, bias and homogenization, design fixation on AI outputs, weak controllability/context retention, privacy/IP concerns, and skill degradation, especially for juniors.

- Proposed guidelines: Keep humans-in-the-loop with verification; clarify policies and data governance; match tool to phase and use structured prompts; surface explainability and provide contextual controls and transparency.

- Future research directions: Build journey/flow-aware datasets and evaluation benchmarks; study fixation mitigation and transparency; develop multi‑agent UX toolkits and prompt frameworks; run longitudinal org/education studies on adoption and skills.

What I found most interesting was the concept of design fixation. Design fixation occurs when designers become fixated on an AI-generated output, which can negatively affect the creativity of design solutions and lead to generic designs. To combat this, some papers suggest that designers can switch up the AI tools they use, the prompts, and also include non-AI-assisted design sessions.

Research papers on AI in design & design fixation:

- How generative AI supports human in conceptual design (Chen et al., 2025)

- Designing Interactions with Generative AI for Art and Creativity (Hu et al., 2025)

- Investigating How Generative AI Affects Decision-Making in Participatory Design (Joshi et al., 2024)

- The Effects of Generative AI on Design Fixation and Divergent Thinking (Wadinambiarachchi et al., 2024)

Internet opinions on AI for UX/UI design

In discussions on Reddit, there seems to be a divide between AI enthusiasts and skeptics. Skepticism comes from cases of AI being used to create the end solutions, with many designers claiming that its output just isn’t comparable in quality to the work of human designers. Enthusiasts, on the other hand, seem to emphasize AI as a tool and assistant for early-stage research, ideation, and UX writing.

Many designers, including me, are experimenting with using AI-assisted coding tools to create working prototypes of our designs. This is, to me, the most amazing application area. What if, in the future, designers could take their Figma mockups and prototypes and translate them into functional code using generative AI? This is where I stake my hopes and where I see a possible real change in the job dynamics of tech teams.

My current takeaways

Even with all the hype around these AI tools for UX/UI design, they are not nearly good enough to replace human design practice. This means that you, as a designer, especially if you’re a beginner or junior, still need to do the work yourself and judge AI outputs. However, AI can be used as an assistant, as a decision-support tool, or as a productivity aid along the way. Just do not rely on it to do the entire job.

Tools to try – Start exploring yourself!

If you want to start exploring with AI in the design process, I’d recommend starting with the following tools:

For AI-prompted UI design:

- Figma Make – Figma’s new AI prompting tool, where you can use natural language to generate design prototypes. You can copy the generated prototypes into Figma files to further manually edit them. Pretty cool stuff!

- Figma Sites

For AI-vibe coding:

- Claude Desktop

- Claude Code – An AI code assistant in the terminal with access to your code files, which gives it contextual awareness of the code you are working with. In my experience, the code generated by Claude Code in the terminal is far better than what you get from general LLM desktop tools (e.g. ChatGPT and Claude Desktop).

- Cursor – Another AI code assistant, with an AI agent built into an interface similar to VS Code. This tool has been created for developers working in teams with bigger code bases. It is also really great, and better for more detailed edits compared to Claude Code.

Other related tools:

Links

- YouTube video: When Designers Start Shipping Real Code: Emmet Connolly from Intercom – This talk by Emmet Connolly includes many hands-on examples and inspiration on how you as a designer can start exploring with AI tools to build prototypes.

Stay tuned for updates on this page! Feel free to leave a comment if you’re experimenting with this as well.